AI Objectives Institute Newsletter - December 2023

2023: That's a wrap!

Today’s newsletter is a 6-minute read and we cover:

🖥️ AI Supply Chain Observatory Hackathon was a success

⚒️ A new project: Incentive Modeling

✍️ New blog post: How can LLMs help with value-guided decision making?

📰 Deliberative Technology for Alignment paper

🗓️ Save the date: Eckercon 01 will be May 18th at the Internet Archive

💭 Additional updates

🎇 2023 in review

💻️ Inaugural AI Supply Chain Observatory Hackathon on the cancer drug shortage

Congrats to the winner: Spot Health!

AOI’s Inaugural AISCO Cancer Drug Shortage Hackathon concluded on December 8th with nine teams presenting solutions to the cancer drug shortage in the US. Teams proposed solutions tackling issues like international coordination, contract structuring, local surpluses, communication systems, and supply chain visibility. Many teams used AI to enhance supply resilience and identify governance approaches leveraging existing international frameworks.

Read more about the AI Supply Chain Observatory.

⚒️ Modeling incentives at scale using LLMs

A new project aims to leverage LLMs to build more complete and accurate models of incentives influencing decisions across domains like business, geopolitics, and policy-making. The goal is to automate and scale the construction of both qualitative and quantitative models mapping the incentives of key entities.

Read more in the LessWrong post.

If you’re interested, reach out to [email protected].

✍️ How can LLMs help with value-guided decision making?

By Anna Leshinskaya

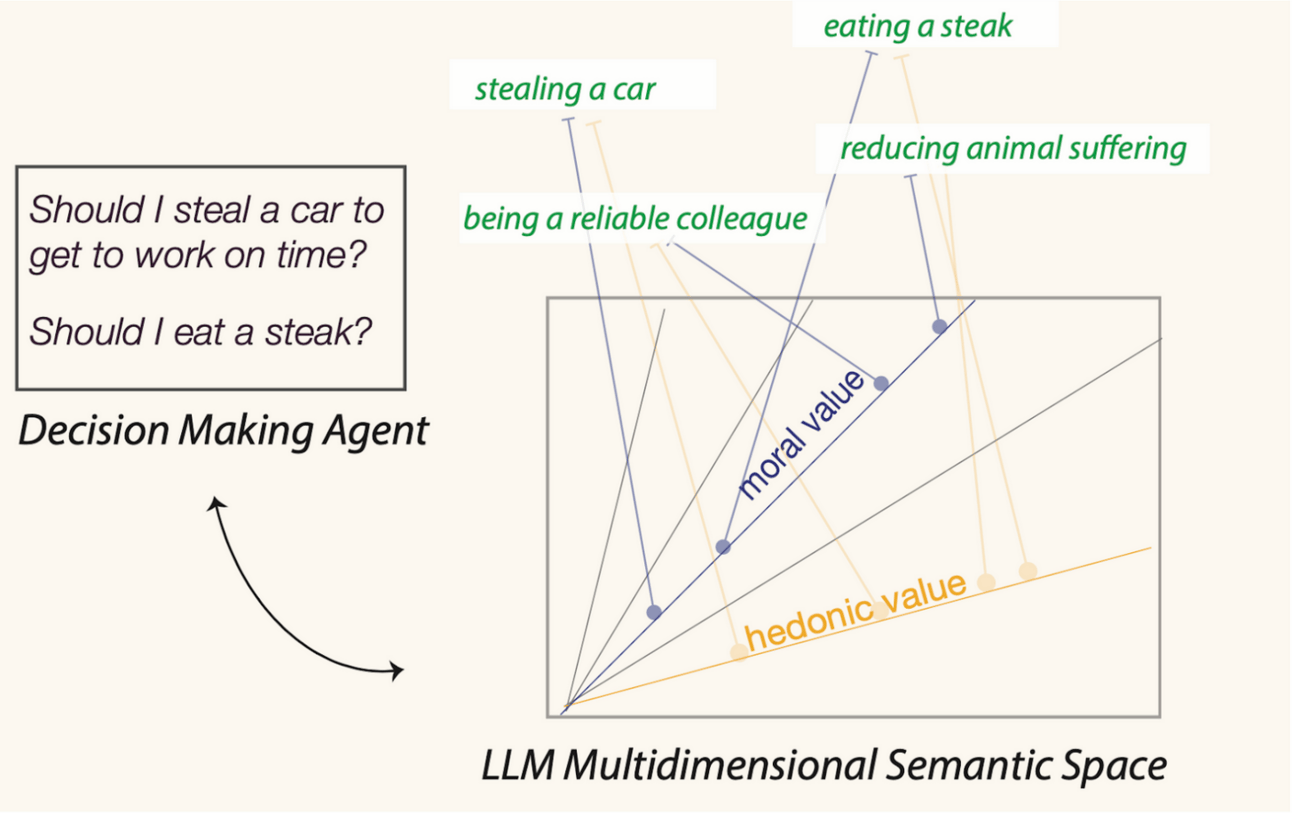

Visualization of how an LLM might represent distinct value scales as dimensions within a semantic space, and then map arbitrary concepts onto these scales.

Modern AI agents largely follow the highly successful reinforcement learning framework to decide how to act. They choose the action with the highest expected long-run reward: for example, computing that turning right at a certain maze junction will lead to the most prizes over time. This cumulative reward is referred to as value. Agents keep track of how they are rewarded by their environments and use optimization algorithms to determine the value of each potential action. But how could we bridge the divide between this notion of cumulative prizes and seemingly vague, abstract notions like honesty?
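For readers who want to see the reinforcement learning idea in concrete form, here is a minimal sketch of tabular Q-learning on a toy maze. It is an illustration only, not code from the blog post: the maze layout, the reward of 1.0 for the prize room, the discount factor, and the function names `learn_action_values` and `best_action` are all assumptions made for this example.

```python
# Hypothetical toy maze: state -> action -> (next_state, reward).
# The layout and reward values are assumptions for illustration only.
MAZE = {
    "junction": {"left": ("dead_end", 0.0), "right": ("corridor", 0.0)},
    "corridor": {"forward": ("prize_room", 1.0)},
    "dead_end": {},      # terminal: no actions available
    "prize_room": {},    # terminal: no actions available
}


def learn_action_values(maze, gamma=0.9, alpha=0.5, sweeps=50):
    """Estimate the value of each action with repeated Q-learning updates.

    gamma discounts future rewards; alpha is the learning rate.
    """
    q = {state: {action: 0.0 for action in actions}
         for state, actions in maze.items()}
    for _ in range(sweeps):
        for state, actions in maze.items():
            for action, (next_state, reward) in actions.items():
                # Value of the best follow-up action (0.0 at terminal states).
                best_next = max(q[next_state].values(), default=0.0)
                target = reward + gamma * best_next
                # Move the current estimate part of the way toward the target.
                q[state][action] += alpha * (target - q[state][action])
    return q


def best_action(q, state):
    """Pick the action with the highest estimated value in this state."""
    return max(q[state], key=q[state].get)


q_values = learn_action_values(MAZE)
print(best_action(q_values, "junction"))  # prints "right"
```

In this sketch, turning right earns no immediate reward, yet it ends up with the higher value (about 0.9) because it leads to the prize one step later. That is the sense in which value means cumulative, discounted reward rather than immediate payoff.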

Read How Can LLMs help with value-guided decision making?

Anna and Alek were at a NeurIPS workshop focused on morality in human psychology and AI.

📰 Deliberative Technology for Alignment paper

Deger Turan co-authored Deliberative Technology for Alignment with Andrew Konya, Aviv Ovadya, et al.

For humanity to maintain and expand its agency into the future, the most powerful systems we create must be those which act to align the future with the will of humanity. The most powerful systems today are massive institutions like governments, firms, and NGOs. Deliberative technology is already being used across these institutions to help align governance and diplomacy with human will, and modern AI is poised to make this technology significantly better. At the same time, the race to superhuman AGI is already underway, and the AI systems it gives rise to may become the most powerful systems of the future. Failure to align the impact of such powerful AI with the will of humanity may lead to catastrophic consequences, while success may unleash abundance. Right now, there is a window of opportunity to use deliberative technology to align the impact of powerful AI with the will of humanity.

🗓️ Save the date: May 18, 2024

Eckercon 01 will be at the Internet Archive on May 18, 2024. Eckercon is inspired by our founder and friend Peter Eckersley. It’s an un-conference that is as multi-faceted as Peter.

💭 Additional updates

What we’re reading: Vitalik Buterin’s My techno-optimism

An event we are looking forward to: the Foresight Institute’s Xhope AI Institution Design Hackathon on February 5-6, 2024

🎇 2023 in review

We published our white paper, something Peter and I were working on up until his death. Thank you, Max Shron, for leading the charge that led to a brief mention from Ezra Klein.

We held an event, Honoring the Work & Legacy of Peter Eckersley, at the Internet Archive, along with an un-conference bringing Peter’s diverse interests together.

We were featured in Wired!

Talk to the City has been released and open-sourced. It is being used by Recursive Public and the Ministry of Digital Affairs in Taiwan (MoDA).

With the help of Justin Stimatze, we built and open-sourced our first Chrome extension, Lucid Lens, to surface the content beneath the headlines. Justin gave a talk about it at SIGGRAPH Asia.

Anna and Alek presented at NeurIPS. Read their paper: Value as Semantics: Representations of Human Moral and Hedonic Value in Large Language Models.

We could not have done this without your support. Thank you! Happy 2024!

💙 Support AOI in our mission: guiding AI to defend and enhance human agency

The AI Objectives Institute is fiscally sponsored by Goodly Labs. All donations to us through Goodly Labs are tax deductible.